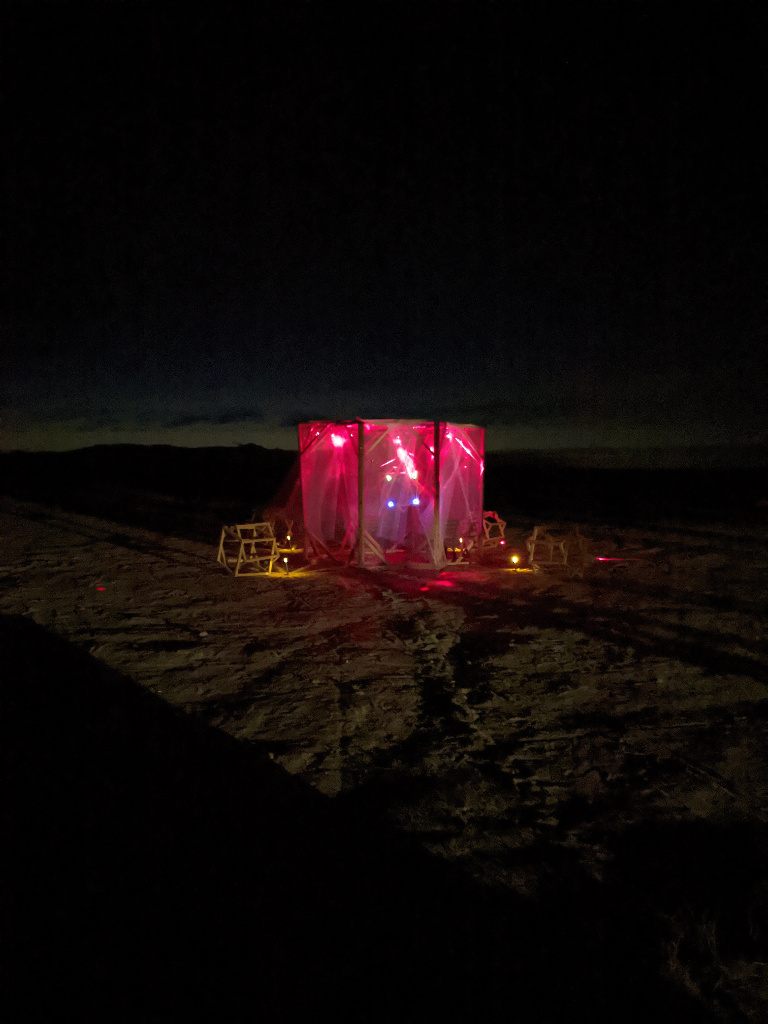

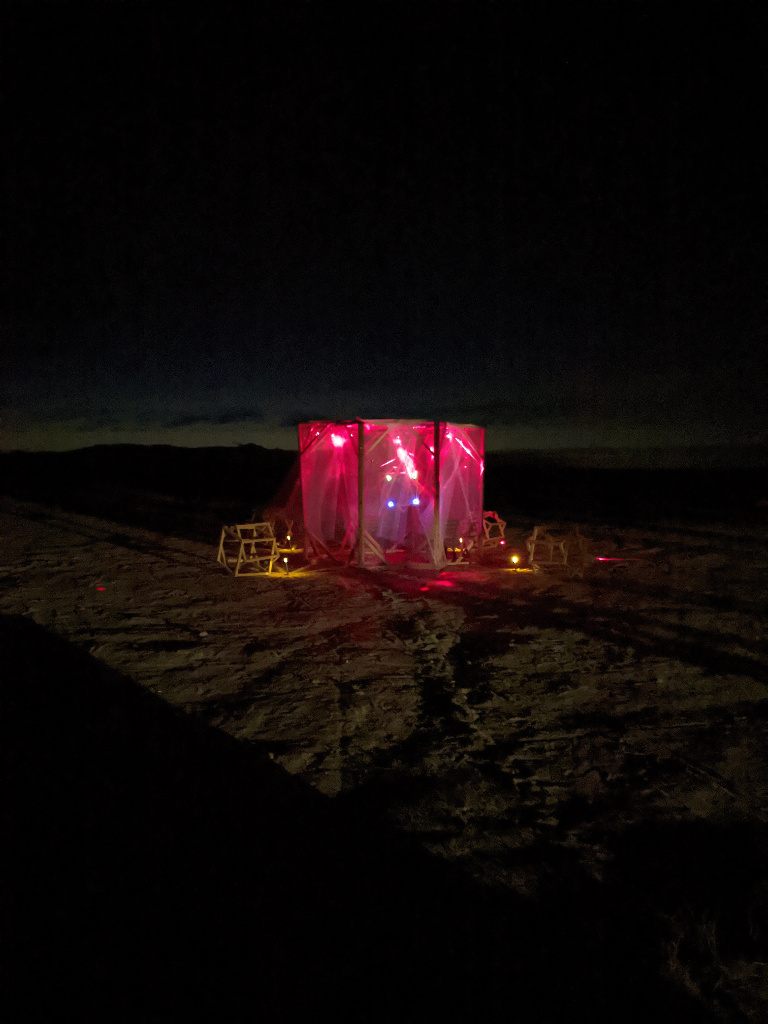

Karaoke of Dreams is a generative karaoke installation developed in collaboration with Sofy Yuditskaya and Sophia Sun during a residency at Bombay Beach, CA as part of brahman.ai. The tesseract structure with laser and crystals, draped with mosquito netting, was designed and built by Sofy Yuditskaya, while the lyrics and MIDI files were generated by Sophia Sun using GPT-2 fine-tuned on pop song lyrics and MuseGAN for MIDI generation. Additional visuals projected on the structure were generated by Sophia with AttnGAN and curated by Sofy. I was responsible for interpreting the MIDI files with Pure Data and for developing a web interface for selecting songs and displaying lyrics using Node.js and React. All the lyrical and musical content for the installation is pregenerated.

The web interface was developed in React and communicated via WebSockets with a Node/Express server running on a Raspberry Pi. Once a song is selected, the web interface signals the server to load the selected lyrics line by line, and the server signals Pure Data via OSC to load the selected MIDI file. The server then tells the web interface that the song is ready to be played, at which point the interface hides the song selection screen and shows the lyrics interface. During playback, Pure Data tells the server which lyrics line to display, and the server in turn sends that line to the web interface. A "stop" button on the lyrics interface stops playback and returns everything to the song selection state.
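The flow above can be sketched as a small state machine. This is only an illustration: the event names, state shape, and `reduce` function are hypothetical and are not taken from the installation's code, and the WebSocket and OSC transports are left out so that only the screen transitions are shown.

```typescript
// Hypothetical sketch of the screen/state transitions described above.
// Event and field names are assumptions made for illustration.
type Screen = "selection" | "loading" | "lyrics";

interface InstallationState {
  screen: Screen;
  songId: string | null;
  lines: string[];            // lyrics loaded by the server, one entry per line
  currentLine: string | null; // line currently shown on the lyrics interface
}

type InstallationEvent =
  | { kind: "selectSong"; songId: string; lines: string[] } // sent by the web interface
  | { kind: "midiLoaded" }                                   // Pure Data has loaded the MIDI file
  | { kind: "showLine"; index: number }                      // Pure Data requests a lyrics line
  | { kind: "stop" };                                        // "stop" button on the lyrics interface

const initialState: InstallationState = {
  screen: "selection",
  songId: null,
  lines: [],
  currentLine: null,
};

function reduce(state: InstallationState, event: InstallationEvent): InstallationState {
  switch (event.kind) {
    case "selectSong":
      // A song can only be chosen from the selection screen.
      if (state.screen !== "selection") return state;
      return { screen: "loading", songId: event.songId, lines: event.lines, currentLine: null };
    case "midiLoaded":
      // The song is ready: hide the selection screen and show the lyrics.
      if (state.screen !== "loading") return state;
      return { ...state, screen: "lyrics" };
    case "showLine": {
      // Ignore line requests outside playback or outside the lyrics range.
      if (state.screen !== "lyrics") return state;
      if (event.index < 0 || event.index >= state.lines.length) return state;
      return { ...state, currentLine: state.lines[event.index] };
    }
    case "stop":
      // Stop playback and return everything to the song selection state.
      return initialState;
  }
}
```

Keeping the transitions in one pure function like this makes it easy to check that out-of-order messages, such as a line request arriving before the MIDI file has loaded, are ignored rather than corrupting the display.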

I used the Pure Data external library Cyclone to load the MIDI files and fed the note events into frequency modulation synth abstractions and synthesized drums. Both the synths and the drums were fed into effects banks consisting of distortion, bitcrushing, delay with feedback, chorus, phasing, flanging, and reverb. Every time a song is selected, several parameters affecting song playback are randomly selected. The playback tempo is allowed to fall between 80 and 160 bpm (each MIDI file defaults to 120 bpm), which adjusts the lyrics' default rate of 4.8 seconds per line. The amounts of phaser, chorus, and flanger, as well as parameters affecting the FM synths (ADSR envelope, note lengths, modulation amount), are also randomized. Additionally, several spots in the song are chosen to increase "tension" by adding distortion, bitcrushing, reverb, and delay in randomly chosen proportions.
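As a rough illustration of the tempo arithmetic, the sketch below picks a tempo in the 80 to 160 bpm range and rescales the lyrics line duration. It assumes the line duration scales inversely with tempo relative to the 120 bpm default; the text only says that the tempo "adjusts" the rate, so the exact rule is an assumption. The function names are hypothetical, and in the installation this logic may well live in the Pure Data patch rather than in TypeScript.

```typescript
// Values taken from the description above.
const DEFAULT_BPM = 120;
const DEFAULT_SECONDS_PER_LINE = 4.8;
const MIN_BPM = 80;
const MAX_BPM = 160;

// Pick a playback tempo uniformly between MIN_BPM and MAX_BPM.
// The random source is injectable so the function can be tested deterministically.
function pickTempo(random: () => number = Math.random): number {
  return MIN_BPM + random() * (MAX_BPM - MIN_BPM);
}

// Assumed rule: line duration is inversely proportional to tempo,
// so a faster song advances its lyrics proportionally faster.
function secondsPerLine(bpm: number): number {
  if (!(bpm > 0)) throw new RangeError("bpm must be positive");
  return DEFAULT_SECONDS_PER_LINE * (DEFAULT_BPM / bpm);
}
```

Under this assumption, the slowest tempo of 80 bpm stretches each line to about 7.2 seconds, and the fastest tempo of 160 bpm shortens it to about 3.6 seconds.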

We presented our work at the 2020 Joint Conference on AI Music Creativity (CSMC/MuME). Our paper for the conference can be found here and here is a video of our lecture edited together by Sofy: